What is Retrieval Augmented Generation?

What is Retrieval Augmented Generation?

Basically we give the Large Language Model the best chance of answering the question by giving the model the answer as well as the question.

What?

Bare with us, we're going to show how we can use documents you already have to make this happen automagically.

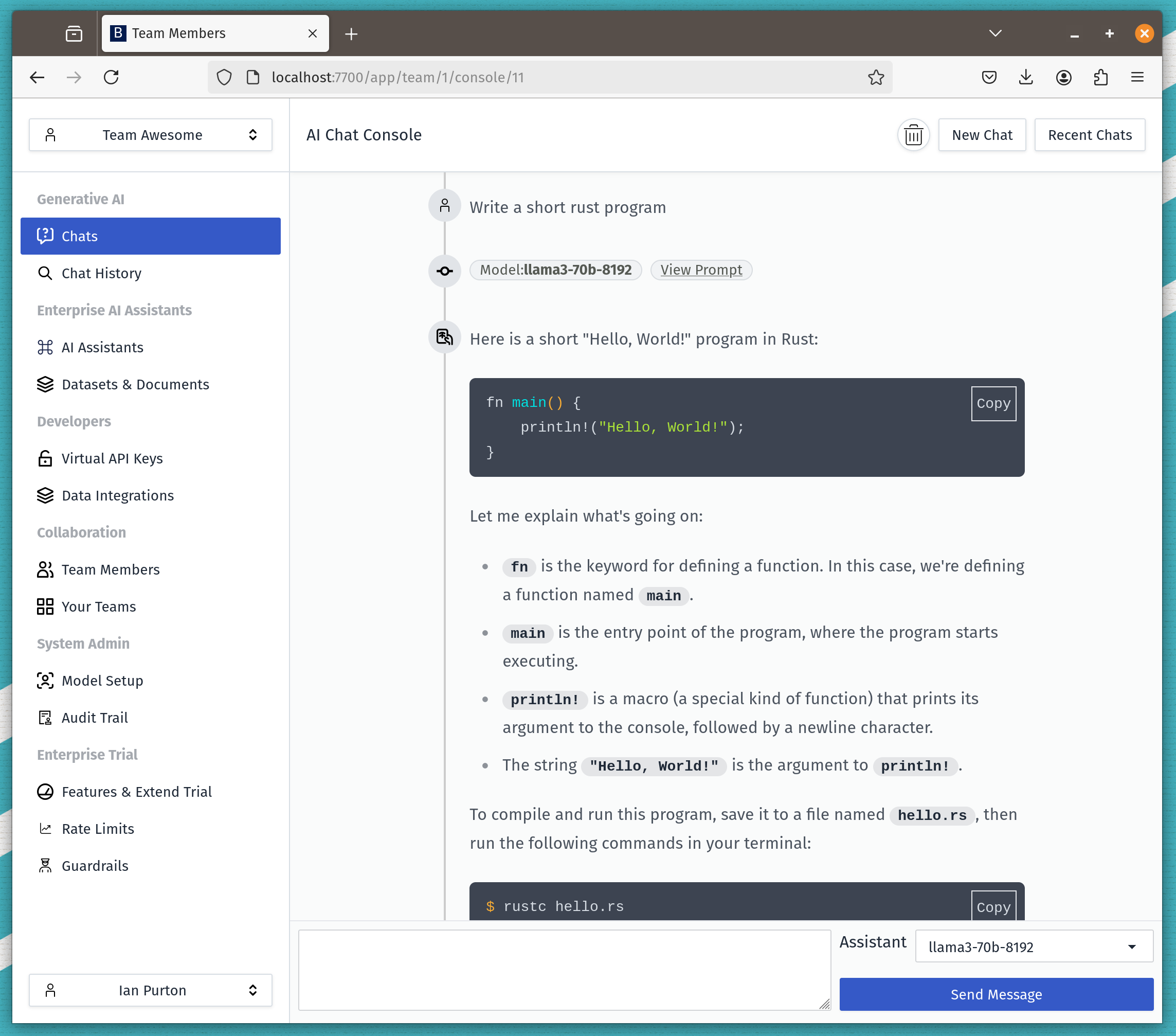

Talking directly with a Large Language Model

In this example we'll use a small language model at 7B parameters so you can follow along.

We'll remove all the frameworks and fluff so you can see exactly what gets sent to the model.

Run up a large language model API on your machine. (Assuming you have docker installed).

Running a local LLM

docker run -p 8080:8080 -it --rm ghcr.io/purton-tech/bionicgpt-model-api

Asking questions via the API

Let's ask our model a question it probably doesn't know the answer to.

curl http://localhost:8080/v1/completions \ -H "Content-Type: application/json" \ -d '{"model": "ggml-gpt4all-j", \ "prompt": "What is a Dobblenobble?" }'

And one possible response is below. Note: you might get a different response due to something called temperature.

The answer

As an AI language model, I do not have any information about the term "Dobblenobblê". It may be a misspelling or an outdated term. Can you please provide more context or information about what it means?

Next we'll ask the same question and give the LLM the answer.

The Question

curl http://localhost:8080/v1/completions \ -H "Content-Type: application/json" \ -d '{"model": "ggml-gpt4all-j", "prompt": "A Dobblenobble is a type of biscuit. What is a Dobblenobble?" }'

The Answer - Welsh Biscuits

A dobbler nobble is a type of biscuit that originated in Wales and is popular among Welsh people. It typically consists of oatmeal, sugar and butter flavoured with orange or lemon zest, and is often served with milk or a dollop of jam. The name "dobbler nobble" is believed to have originated from the fact that it was originally baked in a muffin tin, which resembled the shape of an old-fashioned dobbler, a type of small animal found in Welsh folklore.

I did not make this up. These are the answers I got

Hallucinating

So we gave the model the answer and it kind of fed it back to us but then completely went off on one. In the trade that's called hallucinating.

As far as I know

- A dobbler nobble is not a welsh biscuit. (although it sounds like it would taste delicious)

- There's no small animal in Wales called a dobbler.

A tiny amount of Prompt Engineering

When talking with an LLM we generally use a prompt template that gives the LLM an idea about how we want it to respond.

A typical prompt template consists of several elements that help define the context, the user's request, and any specific instructions. Here's an example of a prompt template, followed by an explanation of its different elements:

Context: You are a virtual assistant helping with travel information. User Request: Provide me with flight options from New York to Los Angeles. Instructions: Use the GPT-3.5 model to generate a concise response with at least three flight options. Include departure times, airlines, and prices.

-

Context: This element sets the stage for the conversation or the scenario in which the user's request is made. It provides important context that helps the model understand the context and generate relevant responses. In this example, the context informs the model that it's acting as a virtual assistant providing travel information.

-

User Request: This part of the template represents the user's input or query. It specifies what the user is asking or requesting from the model. In this case, the user is asking for flight options from New York to Los Angeles.

-

Instructions: Instructions are crucial in guiding the model on how to respond to the user's request. They provide specific details and constraints for generating the response. In this example, the instructions specify that the model should use the GPT-3.5 model and generate a concise response that includes at least three flight options, departure times, airlines, and prices.

By using a prompt template with these elements, you can structure your interactions with the model more effectively. The context sets the stage, the user request defines the user's intent, and the instructions guide the model on how to fulfil that intent. This structured approach helps improve the quality and relevance of the responses generated by the model.

Let's stop the hallucinating (hopefully)

Here's a prompt template for using context. I don't know if it's the best but this is sometimes more art than science.

Context information is below. Given the context information and not prior knowledge, answer the query. ### Prompt: {{.Input}} ### Context: {{.Data}} ### Response:

and if we punch in our question we get

Context information is below. Given the context information and not prior knowledge, answer the query. ### Prompt: What is a Dobblenobble? ### Context: A Dobblenobble is a type of biscuit. ### Response:

Let's send that and see what happens

Calling the LLM

curl http://llm-api:8080/v1/completions \ -H "Content-Type: application/json" \ -d '{"model": "ggml-gpt4all-j", "prompt": "Context information is below. Given the context information and not prior knowledge, answer the query. ### Prompt: What is a Dobblenobble? ### Context: A Dobblenobble is a type of biscuit.### Response:" }'

The Answer

A Dobblenobbl is a type of biscuit.

I'm not sure why it misspelled Dobblenobble, this might be an issue with using a model at only 7 billion parameters.

The retrieval part of things

We can condense data retrieval for LLM's into three key points:

-

Data Collection and Indexing:

- Gather and preprocess a dataset of relevant documents.

- Create an index for efficient retrieval.

-

Query and Retrieve:

- Formulate a query based on user input.

- Use an information retrieval model to retrieve top-k relevant documents.

-

Contextual Input to Generation:

- Pass the retrieved content as context to a generative model.

- Generate responses that are contextually relevant and coherent for the user.

Data Collection and Indexing

Assuming the information you have is stored in your companies documentation.

We can process that information in many ways, but let's just give you an opinionated answer.

- Use a tool such as Unstructured to rip text from your documents.

- Split this text into chunks that are less than 512 tokens.

- Store each chunk in a database.

Storing them in database

Chunks get stored into the database in their text form it's going to look something like

INSERT INTO documents (text) VALUES ('A Dobblenobble is a type of biscuit.');

The problem we now have is how do we retrieve this information when we want to add it to the prompt

SELECT * FROM documents WHERE text = 'What is a dobblenobble';

That query won't work.

Similarity search to the rescue

In postgres we can do the following assuming you have the pgVector extension.

ALTER TABLE documents ADD COLUMN embeddings vector(384);

Now we are able to do a similarity search which looks like

SELECT * FROM documents WHERE text embeddings <-> 'What is a dobblenobble';

And this will return our row because it's similar to the question.

Generating embeddings

How do we populate our embeddings column so we can do similarity search.

We can take our chunks of text and pass them to our LLM we have running locally.

curl http://localhost:8080/v1/embeddings \ -H "Content-Type: application/json" \ -d '{ "input": "A Dobblenobble is a type of biscuit.", "model": "text-embedding-ada-002" }'

This will return 384 floating point numbers. The 384 is the dimensions of our embedding returned by our locally running embeddings model.

The documentation for text-embedding-ada-002 states it should have 1536 dimension not sure why it doesn't.

What is RAG? - A final definition

So RAG is a process you follow

- Retrieve relevant information from your documents based on the question.

- Augment the prompt template so it has more context about the question

- Generate responses based on this augmented template.

After which your model should be able to answer questions it had no knowledge of before with a reduction in hallucinations.